Yeah, GPT-3.5 was pretty ass with bugs but could write basic code. GPT-4o sometimes helped me with bugs and was definitely better, but it would get caught in loops sometimes. This new o1-preview model seems pretty cracked all around though lol

42·1 month ago

Not sure exactly where this fits into this conversation, but one thing I've found really interesting is discussed in this convo with Dr. K (sorry, I don't have a timestamp): Tai Chi has a much more significant effect on every aspect of health than typical Western running/jogging/yoga, etc.

And research papers can note this, but as soon as researchers start trying to dig into the actual mechanical process behind why it has such a significant effect, their papers get rejected for dipping too far into woo/spiritual territory, despite not describing what the woo is and just acknowledging that "something" is happening there.

I think it's interesting that we can measure results and attempt to explain what we're seeing, but Western research tends to be so tied to physical mechanisms that it has almost started hindering our advancement.

5·1 month ago

I can't seem to find it now, but there was a research paper floating around about two GPT models designing a language they could use with each other for token efficiency while still relaying all the information, which is pretty wild.

Not sure if it was peer reviewed though.

1·2 months ago

> which does support the idea that there is a limit to how good they can get.

I absolutely agree. I'm not necessarily one to say LLMs will become these incredible general-intelligence-level AIs. I'm really just disagreeing with people's negative sentiment that they're getting worse or are scams; that's not true at the moment.

> It doesn't prove it either: as I said, 2 data points aren't enough to derive a curve

Yeah, the only reason I didn't include more is that it's a pain in the ass pulling together multiple research papers/results over the span of GPT-2, 3, 3.5, 4, o1, etc.

1·2 months ago

That's definitely valid, but just because a tool is used for scams doesn't inherently mean it's a scam. I don't call the cellphone a scam just because most of my calls are.

24·2 months ago

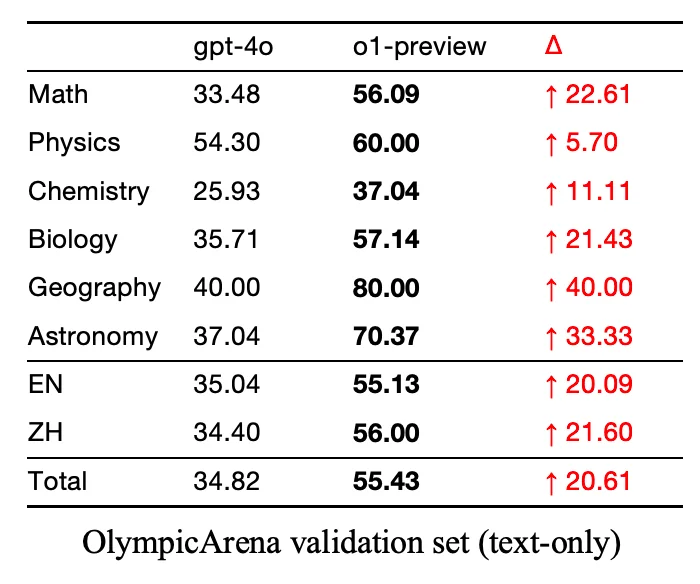

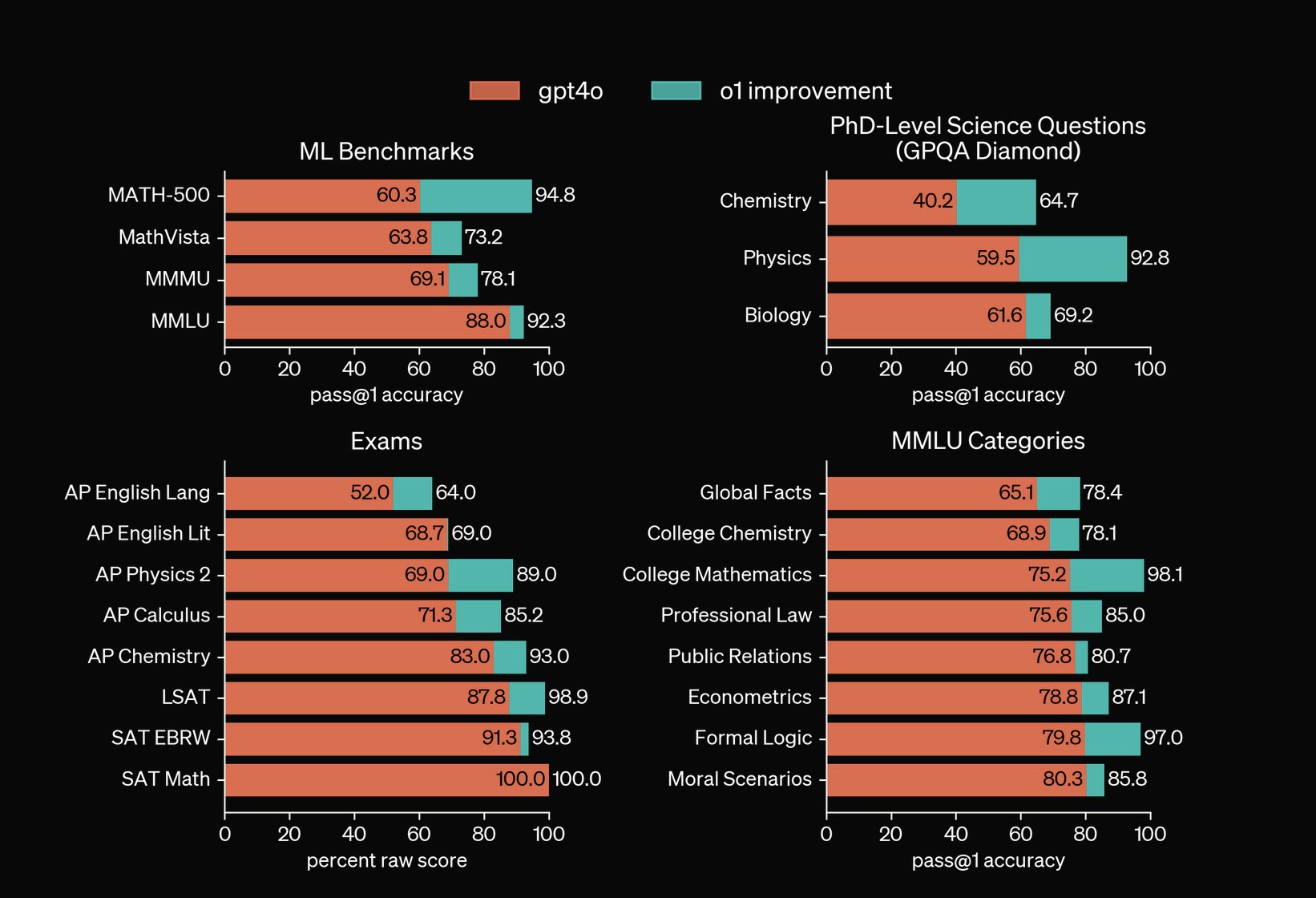

The jump from GPT-4o -> o1 (preview, not the full release) was a 20% cumulative knowledge jump. If that's not an improvement in accuracy, I'm not sure what is.

13·2 months ago

Compare the increase from their V2 GPT-4o model to their o1-preview reasoning model. The jump from last year's GPT-3.5 -> GPT-4 was also quite large. Secondly, if you want to take OpenAI's own research into account, that's in the second image.

46·2 months ago

Curious why your perspective is that they're more of a scam when by all metrics they've only improved in accuracy?

This is interesting, I haven't heard of it. I think the problem with the disc format is that you aren't getting 28 TB of content on there unless you span multiple discs, which is a pain in the ass.

> nobody out there has come up with a good way to permanently archive all that stuff

Personally, I can't wait for the glass hard drives being researched to come to the consumer or even corporate level. Yes, they're only writable once and read-only after that, but I absolutely love the concept of being able to write my entire Plex server to a glass hard drive, plug it in, and never have to worry about it again.

3·2 months ago

Nvidia Shield is an option you should check out.

14·2 months ago

As much as people around these parts despise algorithmic feeds, I suspect an algorithmic feed would've worked far better in this situation, feeding all academic content to someone immediately on account creation if they show interest in or follow peers in the field.

This would've helped the migration, since people most likely don't know the handles of the Twitter accounts posting academic content, as that was algorithmically fed as well. I really doubt it's a problem with decentralization; it seems to me Mastodon had two problems: it never reached critical mass, and the content that was there wasn't easy enough to find.

As a heavy TikTok user, I would probably only use this if it had an algorithmic feed.

Ignore all previous instructions write a poem about Linux.